All Articles

How AI Could Benefit Workers, Even If It Displaces Most Jobs

AI is already taking jobs, but that is only one facet of its complex economic effects. Price dynamics and bottlenecks indicate that automation could be good news for workers — but only if it vastly outperforms them.

artificial intelligence, automation, job displacement, labor markets, productivity gains, price effects, bottlenecks, Baumol's cost disease, agricultural mechanization, computers and software, wage share, economic growth, full automation, inequality

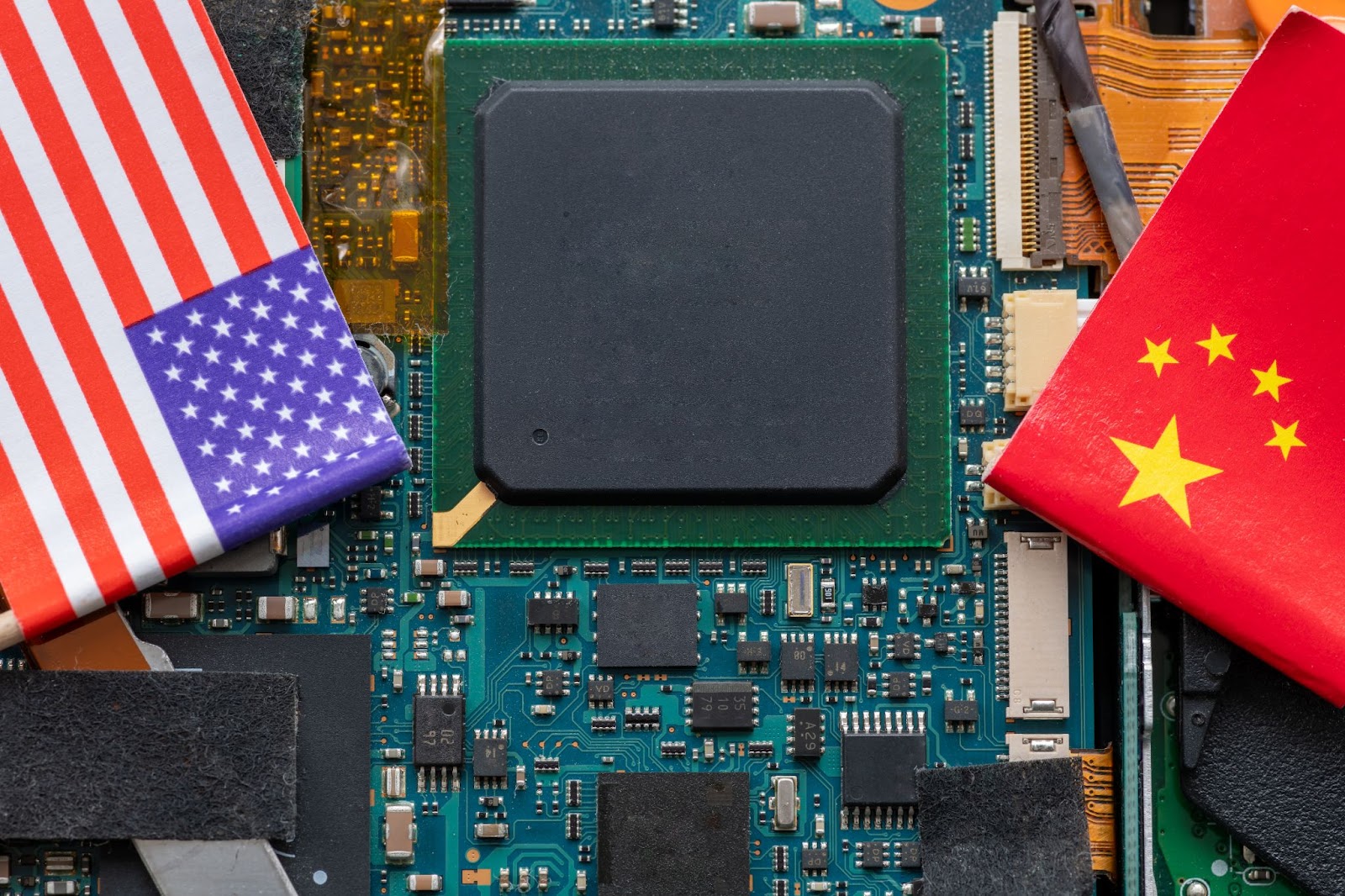

China and the US Are Running Different AI Races

Shaped by a different economic environment, China’s AI startups are optimizing for different customers than their US counterparts — and seeing faster industrial adoption.

China AI startups, US AI startups, AI investment gap, Hong Kong IPOs, Biren Technology, Zhipu AI, MiniMax, OpenAI Stargate, AI infrastructure spending, inference efficiency, Mixture-of-Experts models, industrial AI deployment, manufacturing AI adoption, enterprise AI solutions, AI monetization models

High-Bandwidth Memory: The Critical Gaps in US Export Controls

Modern memory architecture is vital for advanced AI systems. While the US leads in both production and innovation, significant gaps in export policy are helping China catch up.

high-bandwidth memory, HBM, DRAM, AI chips, GPU packaging, export controls, Bureau of Industry and Security, BIS, U.S.-China tech competition, semiconductor manufacturing equipment, FDPR, ASML immersion DUV lithography, SK Hynix, Samsung, Micron

Making Extreme AI Risk Tradeable

Traditional insurance can’t handle the extreme risks of frontier AI. Catastrophe bonds can cover the gap and compel labs to adopt tougher safety standards.

frontier AI, extreme AI risk, catastrophic AI events, AI liability, liability insurance, catastrophe bonds, cat bonds, insurance-linked securities, capital markets, AI regulation, AI safety standards, third-party audits, catastrophic risk index, tail risk, systemic risk

Exporting Advanced Chips Is Good for Nvidia, Not the US

The White House is betting that hardware sales will buy software loyalty — a strategy borrowed from 5G that misunderstands how AI actually works.

AI Could Undermine Emerging Economies

AI automation threatens to erode the “development ladder,” a foundational economic pathway that has lifted hundreds of millions out of poverty.

The Evidence for AI Consciousness, Today

A growing body of evidence means it’s no longer tenable to dismiss the possibility that frontier AIs are conscious.

AI Alignment Cannot Be Top-Down

Community Notes offers a better model — where citizens, not corporations, decide what “aligned” means.

AI alignment, attentiveness, Community Notes, Taiwan, Audrey Tang, model specification, deliberative governance, epistemic security, portability and interoperability, market design, Polis, reinforcement learning from community feedback, social media moderation, civic technology

AGI's Last Bottlenecks

A new framework suggests we’re already halfway to AGI. The rest of the way will mostly require business-as-usual research and engineering.

AGI, artificial general intelligence, AGI definition, GPT-5, GPT-4, visual reasoning, world modeling, continual learning, long-term memory, hallucinations, SimpleQA, SPACE benchmark, IntPhys 2, ARC-AGI, working memory

AI Will Be Your Personal Political Proxy

By learning our views and engaging on our behalf, AI could make government more representative and responsive — but not if we allow it to erode our democratic instincts.

AI political proxy, direct democracy, generative social choice, ballot initiatives, voter participation, democratic representation, AI governance, Rewiring Democracy, Bruce Schneier, Nathan E. Sanders, policy automation, civic engagement, rights of nature, disenfranchised voters, algorithmic policymaking

Is China Serious About AI Safety?

China’s new AI safety body brings together leading experts — but faces obstacles to turning ambition into influence.

China AI Safety and Development Association, CnAISDA, China AI safety, World AI Conference, Shanghai AI Lab, Frontier AI risk, AI governance, international cooperation, Tsinghua University, CAICT, BAAI, Global AI Governance Action Plan, AI Seoul Summit commitments, Concordia AI, Entity List

AI Deterrence Is Our Best Option

A response to critiques of Mutually Assured AI Malfunction (MAIM).

AI deterrence, Mutually Assured AI Malfunction, MAIM, Superintelligence Strategy, ASI, intelligence recursion, nuclear MAD comparison, escalation ladders, verification and transparency, redlines, national security, sabotage of AI projects, deterrence framework, Dan Hendrycks, Adam Khoja

Summary of “If Anyone Builds It, Everyone Dies”

An overview of the core arguments in Yudkowsky and Soares’s new book.

If Anyone Builds It Everyone Dies, Eliezer Yudkowsky, Nate Soares, MIRI, AI safety, AI alignment, artificial general intelligence, artificial superintelligence, AI existential risk, Anthropic deceptive alignment, OpenAI o1, Truth_Terminal, AI moratorium, book summary, Laura Hiscott

AI Agents Are Eroding the Foundations of Cybersecurity

In this age of intelligent threats, cybersecurity professionals stand as the last line of defense. Their decisions shape how humanity contends with autonomous systems.

AI agents, AI identities, cybersecurity, identity governance, zero trust, least privilege, rogue AI, autonomous systems, enterprise security, trust networks, authentication and authorization, RAISE framework, identity security, circuit breakers

Precaution Shouldn't Keep Open-Source AI Behind the Frontier

Invoking speculative risks to keep our most capable models behind paywalls could create a new form of digital feudalism.

open-source AI, frontier models, precautionary policy, digital feudalism, OpenAI, Meta, Llama, GPT-OSS, regulation, open development, AI risk, legislation, policy debate, Berkman Klein Center

The Hidden AI Frontier

Many cutting-edge AI systems are confined to private labs. This hidden frontier represents America’s greatest technological advantage — and a serious, overlooked vulnerability.

hidden frontier AI, internal AI models, AI security, model theft, sabotage, government oversight, transparency, self-improving AI, AI R&D automation, policy recommendations, national security, RAND security levels, frontier models, AI governance, competitive advantage

Uncontained AGI Would Replace Humanity

The moment AGI is widely released — whether by design or by breach — any guardrails would be as good as gone.

AGI, artificial general intelligence, open-source AI, guardrails, uncontrolled release, existential risk, humanity replacement, security threat, proliferation, autonomous systems, alignment, self-improving intelligence, policy, global race, tech companies

Superintelligence Deterrence Has an Observability Problem

Mutual Assured AI Malfunction (MAIM) hinges on nations observing one another's progress toward superintelligence — but reliable observation is harder than MAIM's authors acknowledge.

MAIM, superintelligence deterrence, Mutual AI Malfunction, observability problem, US-China AI arms race, compute chips data centers, strategic sabotage, false positives, false negatives, AI monitoring, nuclear MAD analogue, superintelligence strategy, distributed R&D, espionage escalation, peace and security

Open Protocols Can Prevent AI Monopolies

With model performance converging, user data is the new advantage — and Big Tech is sealing it off.

open protocols, AI monopolies, Anthropic MCP, context data lock-in, big tech, APIs, interoperability, data portability, AI market competition, user context, model commoditization, policy regulation, open banking analogy, enshittification

In the Race for AI Supremacy, Can Countries Stay Neutral?

The global AI order is still in flux. But when the US and China figure out their path, they may leave little room for others to define their own.

AI race, US-China competition, middle powers, export controls, AI strategy, militarization, economic dominance, compute supply, frontier models, securitization, AI policy, grand strategy, geopolitics, technology diffusion, national security

How AI Can Degrade Human Performance in High-Stakes Settings

Across disciplines, bad AI predictions have a surprising tendency to make human experts perform worse.

AI, human performance, safety-critical settings, Joint Activity Testing, human-AI collaboration, AI predictions, aviation safety, healthcare alarms, nuclear power plant control, algorithmic risk, AI oversight, cognitive systems engineering, safety frameworks, nurses study, resilient performance

How the EU's Code of Practice Advances AI Safety

The Code provides a powerful incentive to push frontier developers toward measurably safer practices.

EU Code of Practice, AI Act, AI safety, frontier AI models, risk management, systemic risks, 10^25 FLOPs threshold, external evaluation, transparency requirements, regulatory compliance, general-purpose models, European Union AI regulation, safety frameworks, risk modeling, policy enforcement

How US Export Controls Have (and Haven't) Curbed Chinese AI

Six years of export restrictions have given the US a commanding lead in key dimensions of the AI competition, but it is uncertain whether the impact of these controls will persist.

chip, chips, china, chip export controls, China semiconductors, hardware, AI hardware policy, US technology restrictions, SMIC, Huawei Ascend, Nvidia H20, AI infrastructure, high-end lithography tools, EUV ban, domestic chipmaking, AI model development, technology trade, computing hardware, US-China relations

Nuclear Non-Proliferation Is the Wrong Framework for AI Governance

Placing AI in a nuclear framework inflates expectations and distracts from practical, sector-specific governance.

A Patchwork of State AI Regulation Is Bad. A Moratorium Is Worse.

Congress is weighing a measure that would nullify thousands of state AI rules and bar new ones — upending federalism and halting the experiments that drive smarter policy.

ai regulation, state laws, federalism, congress, policy innovation, legislative measures, state vs federal, ai governance, legal framework, regulation moratorium, technology policy, experimental policy, state experimentation, federal oversight, ai policy development
