AI Alignment Cannot Be Top-Down

Community Notes offers a better model — where citizens, not corporations, decide what “aligned” means.

.png)

.png)

In March 2024, I opened Facebook and saw Jensen Huang’s face. The Nvidia CEO was offering investment advice, speaking directly to me in Mandarin. Of course, it was not really Huang. It was an AI-generated scam, and I was far from the first to be targeted: across Taiwan, a flood of scams was defrauding millions of citizens.

We faced a dilemma. Taiwan has the freest internet in Asia; any content regulation is unacceptable. Yet AI was being used to weaponize that freedom against the citizenry.

Our response — and its success — demonstrates something fundamental about how AI alignment must work. We did not ask experts to solve it. We did not let a handful of researchers decide what counted as “fraud.” Instead, we sent 200,000 random text messages asking citizens: what should we do together?

Four hundred forty-seven everyday Taiwanese — mirroring our entire population by age, education, region, occupation — deliberated in groups of 10. They were not seeking perfect agreement but uncommon ground — ideas that people with different views could still find reasonable. Within months, we had unanimous parliamentary support for new laws. By 2025, the scam ads were gone.

This is what I call attentiveness: giving the people real, ongoing power to steer technology. It is the foundation of how Taiwan has aligned AI with our society. And it is the missing ingredient in global AI alignment efforts.

In technical terms, alignment means ensuring that AI systems act in accordance with human values and intentions. But, as Taiwan’s experience with AI deception shows, alignment cannot be defined in the abstract; it depends on context. Choices that guide how AI systems respond — for instance, prioritizing freedom of expression — can also make them prone to harmful uses, such as scams and disinformation. True alignment demands navigating such tensions, deciding which values must take precedence in a given context. This can be done only by keeping AI’s development in continuous conversation with the societies where it is deployed.

But today’s dominant approach to AI alignment looks nothing like this. It is highly vertical, dominated by a limited number of actors within a few private AI corporations. These actors select the training data, set the optimal objectives, and unilaterally define what counts as “aligned” behavior. They publish high-level model specifications (e.g., “be helpful”), but operationalize and enforce them behind closed doors.

When unexpected behavior emerges, patch fixes are applied based solely on the developer’s judgment of risk and acceptability. The result is a system that interacts with billions of people but is, by default, controlled by a small circle of researchers and executives, while those most affected have almost no voice in shaping the outcomes.

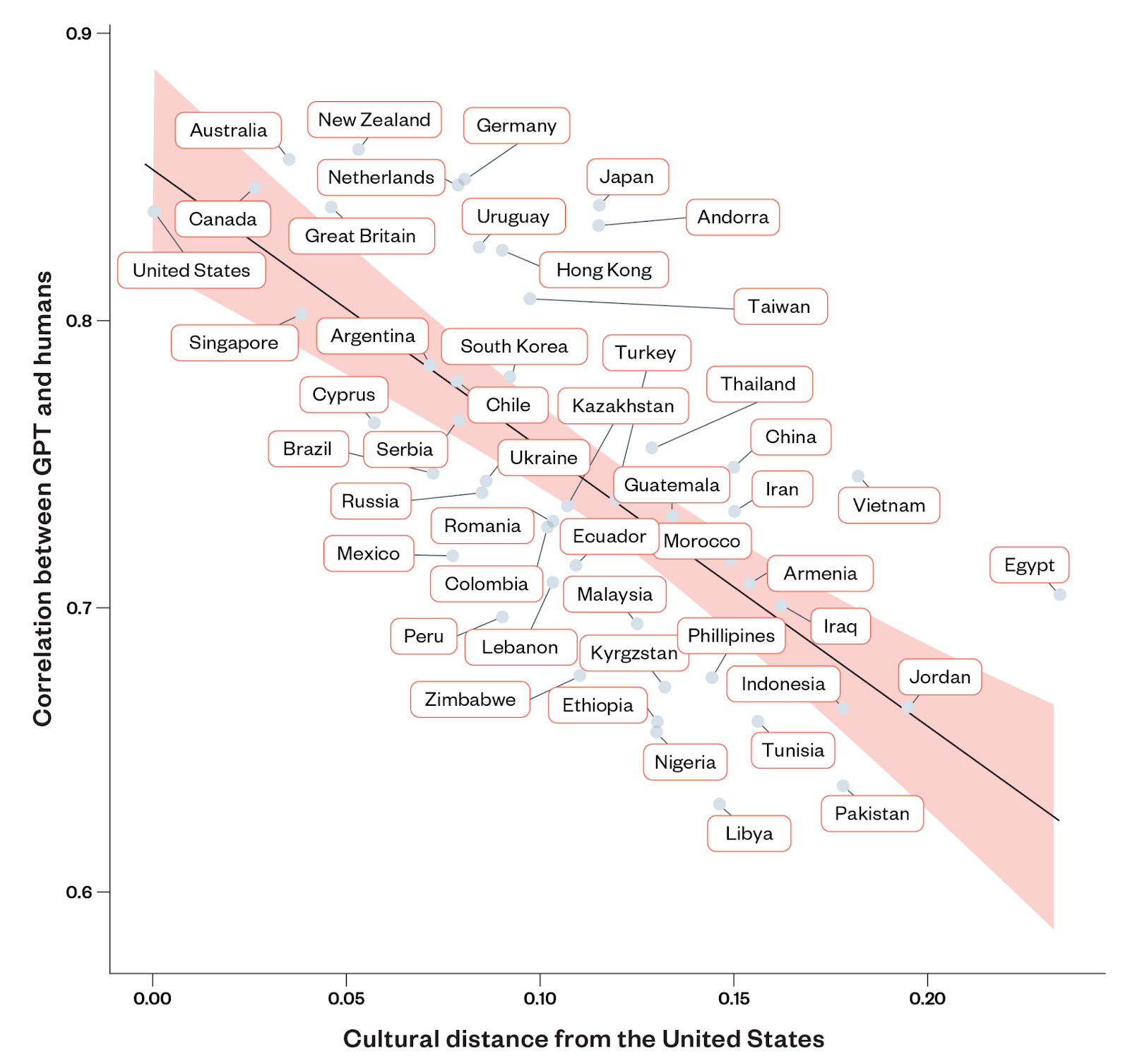

This centralized, globally optimized approach to alignment fundamentally underestimates the true complexity of AI. The world is populated with diverse, messy societies, each with its own historical and cultural context that produces different values and priorities. There is no principled reason to believe that a small group of individuals can determine what alignment means for everyone. Instead, alignment must be shaped by countless, locally contextualized judgments.

The risks of continuing this inattentive approach to alignment are severe. Today, leading AI models project the values of their makers. Once embedded into civic, economic, and governmental decision-making (drafting laws, grading exams, advising lawyers, screening welfare applications, or summarizing public consultations), these systems will do more than mislead: they will begin to redefine what a society treats as truth and whose experience qualifies as evidence, hollowing out the very institutions meant to uphold collective sense-making.

When the linguistic and moral frameworks of public reasoning are mediated by a handful of culturally uniform systems, democratic pluralism will erode.

With the current approach to AI alignment, we are seeing a repeat of the mistakes made in efforts to align social media. In the 2010s, platforms relied on centralized, top-down moderation: trust and safety teams wrote global rules and enforced them with automated filters and human review.

Trying to answer all relevant questions through centralized, upstream programming inevitably failed under the weight of real-world complexity — with tragic consequences. Facebook’s systems failed to stem military-run disinformation that fueled attacks against the Rohingya in Myanmar. Incremental fixes — warning labels, carve-outs, AI-enabled reviews — could not solve the structural problem inherent in a handful of decision-makers trying to govern billions of posts across diverse cultures.

The innovative breakthrough came when platforms began shifting power outward. Twitter’s Birdwatch, later X’s Community Notes, built attentiveness into the design: volunteers add clarifying notes, surfaced only when rated helpful by people with differing views. Transparency and plural participation became structural features, not afterthoughts. Community Notes is far from perfect, but it represents a move from centralized edicts to auditable, distributed steering power.

/inline-pitch-cta

This is exactly the kind of attentiveness that is lacking in current AI alignment efforts. Just as Community Notes democratized context in social media, the AI systems that will increasingly shape governance, the economy, and civic life must embed structured participation by those most affected. To enable course correction, they must continuously notice mismatches: who is being harmed, what needs are unmet, and where meaning is breaking down.

Just as a small circle of trust and safety officials could not steer global social media, no handful of researchers can successfully align general-purpose AI systems for the world.

Applying the principle of attentiveness would shift the field from pursuing centralized, primarily technical “solutions” toward democratic co-creation and governance. Without attentiveness, we risk building systems that entrench narrow values, pursue harmful goals at scale, or even escape meaningful human control altogether.

Fortunately, the tools needed to pursue a more attentive course already exist.

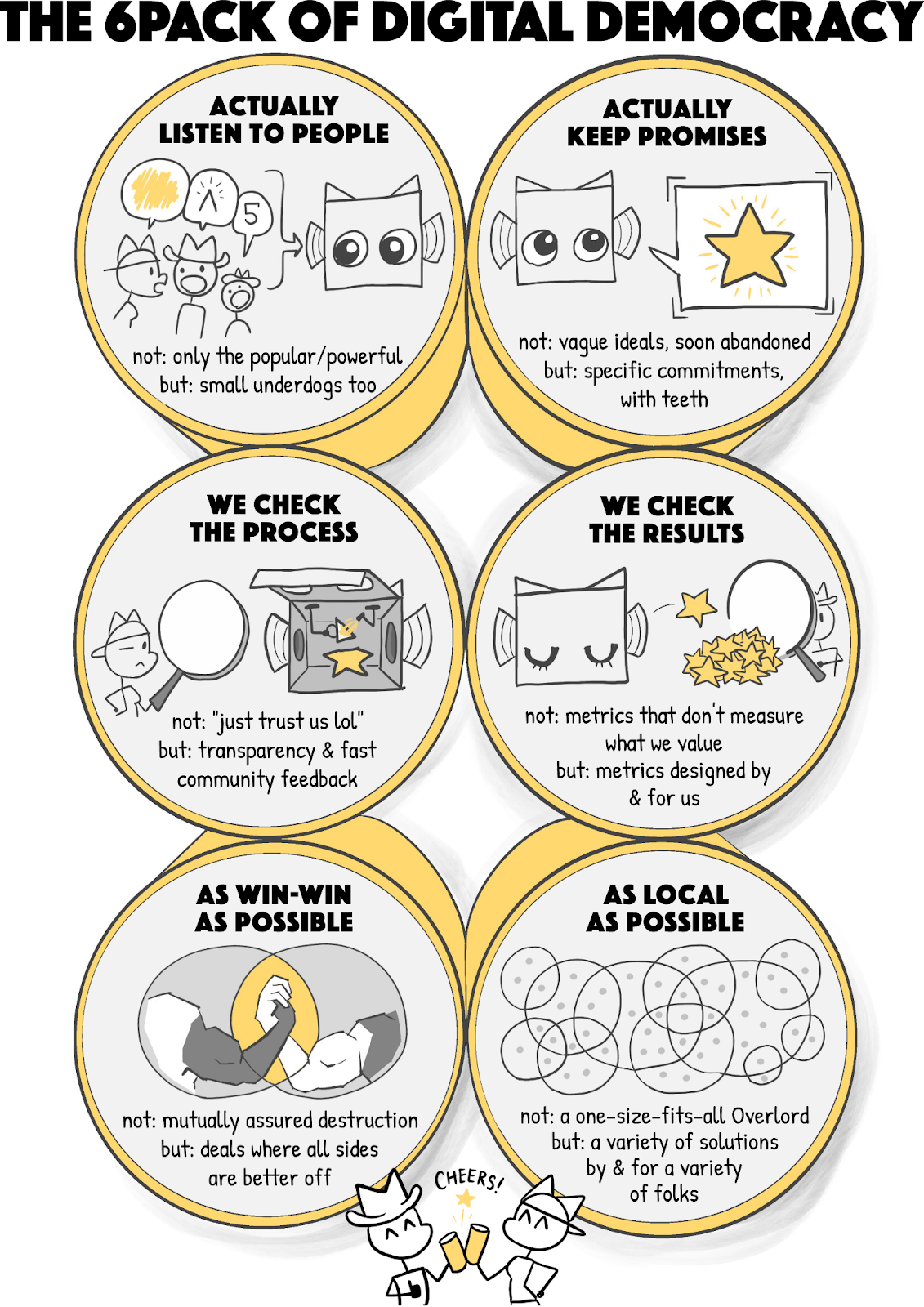

Attentiveness does not emerge by accident; it rests on an explicit ethical foundation. Building on University of Minnesota Prof. Joan Tronto’s care ethics, I, along with Caroline Emmer De Albuquerque Green (of the University of Oxford’s Institute for Ethics in AI), developed the 6-Pack of Care — six interlocking practices that translate ethical principles into institutional design. The framework recognizes a basic asymmetry: AI operates at speeds and scales beyond human oversight. To keep it aligned, our institutions must evolve to match that tempo by learning, responding, and recalibrating continuously, with people in the loop at every level.

Attentiveness is the essential ingredient, which is why we have placed it first in the 6-Pack of Care. Every other form of care depends on seeing clearly where need and impact arise.

Yet, today’s dominant approach to AI alignment is deeply inattentive.

So how can we turn these insights into practical systems for AI alignment at a global scale? This challenge will require action along three mutually reinforcing directions: industry norms, market policy, and community-scale assistants. Critically, these are not hypothetical. They are tested tools already in use today — piloted by AI companies, deployed in civic-tech systems, and trialed in limited jurisdictions. They show what scaled attentiveness can look like in practice.

As discussed, the current landscape of AI alignment is dominated by a handful of private corporations setting goals, selecting data, and defining “acceptable” behavior behind closed doors.

Attentiveness begins by opening that black box. When AI corporations make their reasoning legible to the public, alignment becomes a shared responsibility rather than a proprietary secret.

Some developers do publish various kinds of model constitutions and public specifications that define, in plain language, how a system is intended to behave, versioned like open-source code. Each clause represents a promise. Some prototypes are also testing citations at inference time, where an AI model’s outputs reference the policy clause that guided the reasoning behind an output — a lightweight but powerful auditing mechanism.

Once intentions, reasoning, and revisions are made public, outsiders — journalists, researchers, civic technologists — can test whether systems live up to their commitments. In doing so, they transform alignment from faith-based to verifiable, from a closed procedure into a visible, collective act of steering.

Once norms make AI behavior legible, the next challenge is ensuring that incentives reward those who act responsibly.

The way markets are structured shapes whether attentiveness is sustainable or self-defeating. Portability mandates allow users to move their data between platforms. This lowers switching costs for users who want to leave harmful platforms, incentivizing platforms to compete for users on the basis of care rather than capture. Procurement standards can compel governments to adopt more auditable systems, and subscription models allow companies to focus on user trust and community health instead of chasing ad-based revenue through sensational and divisive content.

Some jurisdictions are already moving in this direction. Utah’s Digital Choice Act (H.B. 418), for example, establishes greater user-data portability and interoperability for social media, requiring platforms to make user networks transferable across services. Similar proposals under discussion in Europe and the US Congress would extend this portability to AI ecosystems.

/odw-inline-subscribe-cta

Shifting market incentives in these ways can make attentiveness economically viable. When care becomes a competitive advantage, the business logic of AI begins to align with community values.

If norms set expectations and markets set incentives, community-scale AI assistants can make attentiveness tangible in daily civic life.

Where foundation models aim for generality, community-scale assistants are tuned to the specific histories, dialects, and norms of a community, serving as mediators between global technologies and local realities. Through systems like Reinforcement Learning from Community Feedback, which rewards models for output that people with opposing views find reasonable, community-scale assistants can transform disagreement into sense-making and problem-solving.

Platforms like Polis, a machine learning platform that performs real-time analysis of public votes to build consensus on policy debates, already reveal what this looks like in practice. When combined with integrity infrastructure — oversight by representative citizen bodies, verifiable personhood credentials, and transparent logs — AI-enabled mediation can fill the gaps that one-size-fits-all global models inevitably miss, making AI governance adaptive, pluralistic, and auditable in real time.

These levers of attentiveness will not matter if they stay confined to experiments in a few cities or labs. Steering requires scale; it needs to move from pilots to infrastructure that billions can rely on.

At a high level, sequencing matters. Frontier AI corporations must move first, opening systems with model specifications and clause-level transparency. Platforms must follow closely, creating APIs for bridging notes and adopting portability protocols, allowing communities and user histories to be carried across services. Regulators play a parallel role in defining the standards that make portability and interoperability possible, while civil society advances pluralism through representative citizen oversight and community-scale assistants.

Success, too, must be measurable: how quickly bridging notes arrive, how effectively they reduce polarization, how often outputs cite their governing rules, and how freely users can move across social networks and AI services. These metrics tell us whether attentiveness is working in practice, and where course corrections are needed.

Naturally, objections to this approach will arise, particularly regarding efficiency, value coherence, and coordination costs. Making attentiveness the foundation of our future AI alignment paradigm is a serious challenge, but one that is possible to meet.

As with any innovation, there are trade-offs to manage. Open models can lower the barrier for malicious use; but, when implemented as community-scale assistants with citizen-led integrity checks, they also extend protection to communities otherwise overlooked. Mandatory portability may disrupt entrenched players’ dominance, but it will also foster competition in a race to community-wide excellence. Collective steering might seem to slow progress, but lightweight tools like model specifications and reasoning-time citations actually accelerate iteration and trust.

First as a civic technologist, then as Taiwan’s digital minister, and now as its cyber ambassador, I have seen how years of civic-tech innovation and cross-sector collaboration in Taiwan have produced a system for attentive technological alignment.

And it works. With this steering wheel and attentive civic drivers, we have blunted polarization and kept our information ecosystem aligned with the Taiwanese people’s shared goals and values. No blanket suppression is needed. No amplification arms race. Just fast, transparent turns of the wheel by those on the front line of the impact.

But what Taiwan has built is not just a tool for defending against disinformation. Rather, it has built and tested a model system for broader democratic AI alignment — one that channels civic participation, transparency, and rapid response into alignment mechanisms.

The tools are here: public, portable, and pluralistic. They are not perfect, but they work, and they reinforce one another.

The real test is whether we can embed these practices into the everyday operation of AI. Give people the steering wheel. We, the people, are the alignment system we have been waiting for.

See things differently? AI Frontiers welcomes expert insights, thoughtful critiques, and fresh perspectives. Send us your pitch.

Modern memory architecture is vital for advanced AI systems. While the US leads in both production and innovation, significant gaps in export policy are helping China catch up.

Traditional insurance can’t handle the extreme risks of frontier AI. Catastrophe bonds can cover the gap and compel labs to adopt tougher safety standards.