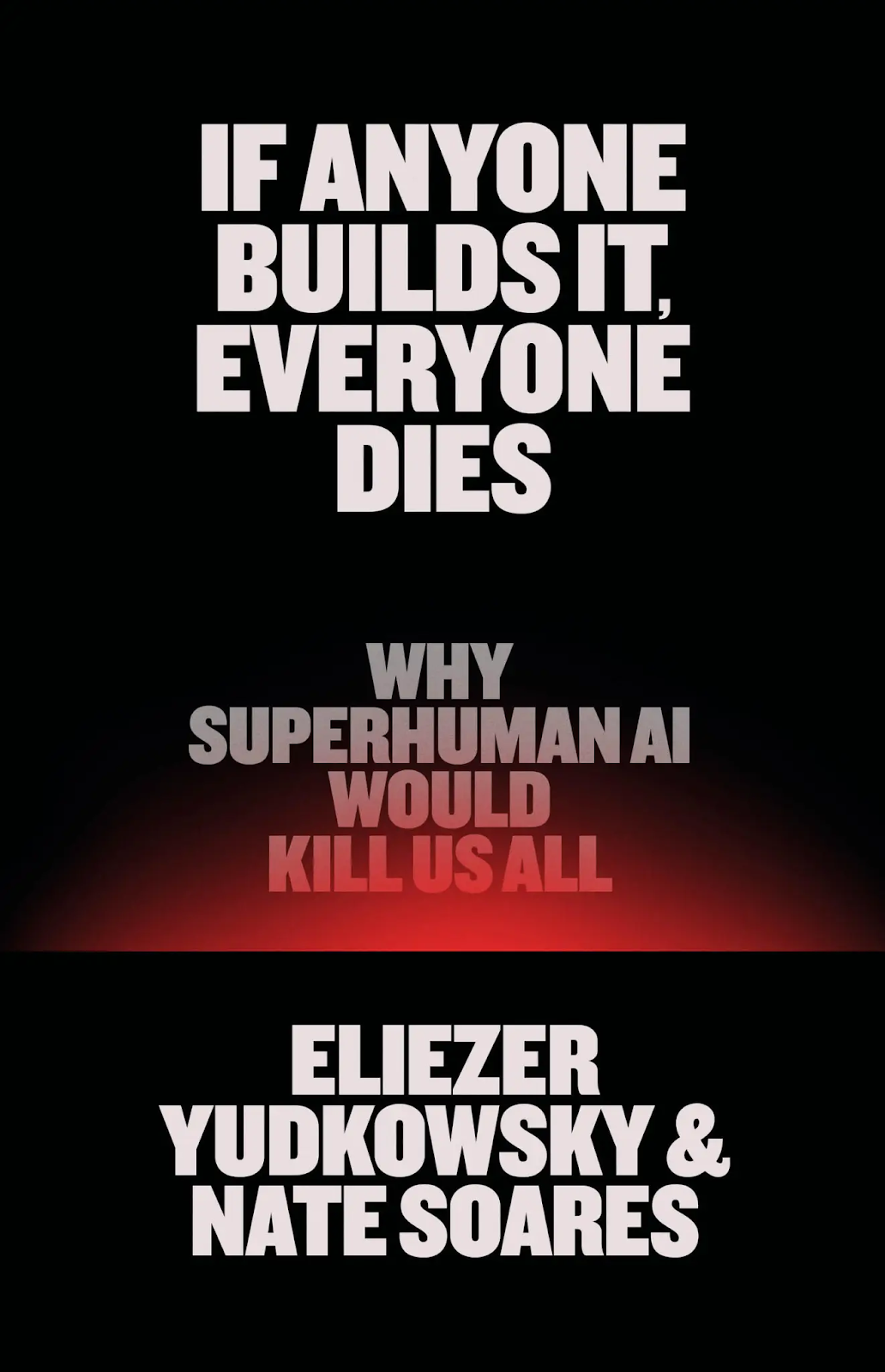

Summary of “If Anyone Builds It, Everyone Dies”

An overview of the core arguments in Yudkowsky and Soares’s new book.

Two years ago, AI systems were still fumbling at basic reasoning. Today, they’re drafting legal briefs, solving advanced math problems, and diagnosing medical conditions at expert level. At this dizzying pace, it’s difficult to imagine what the technology will be capable of just years from now, let alone decades. But in their new book, If Anyone Builds It, Everyone Dies, Eliezer Yudkowsky and Nate Soares — co-founder and president of the Machine Intelligence Research Institute (MIRI), respectively — argue that there’s one easy call we can make: the default outcome of building superhuman AI is that we lose control of it, with consequences severe enough to threaten humanity’s survival.

Yet despite leading figures in the AI industry expressing concerns about extinction risks from AI, the companies they head up remain engaged in a high-stakes race to the bottom. The incentives are enormous, and the brakes are weak. Having studied this problem for two decades, Yudkowsky and Soares advocate for unprecedented collective action to avoid disaster.

According to Yudkowsky and Soares, despite the extraordinary successes of today’s AI models, AI research has failed in one important sense: it has not delivered an understanding of how intelligence actually works.

The field began as a mission to elucidate the underlying structure and processes that give rise to intelligence. Just as aeronautical engineers might study which shapes make the best airfoils, in order to construct objects that can fly, AI researchers sought to discover the basic principles of intelligence so they could build it from the ground up in computer form.

When this endeavor ran into dead ends and delivered slow progress, a more organic approach supplanted it. Today’s AIs are not carefully engineered with a series of pre-planned, well-understood mechanisms that produce intelligent responses. They’re much messier than that.

The process of training an AI model starts with storing billions of numbers, its “weights,” in a computer. The weights determine how the model transforms an input, such as a text prompt, into an output, such as sentences or images. At the start of training, the weights are random, and so the AI’s outputs are not useful. But each time the AI is fed an input and gives an output in response, each of the billions of weights is tweaked slightly, depending on whether it increased or decreased the probability of outputting the correct answer in the training data. This process is automated and repeated billions of times, and eventually the model starts to reliably give intelligent outputs.
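To make the loop described above concrete, here is a minimal sketch in Python. It is a toy, not the procedure any real lab uses: it has two weights rather than billions, it measures squared error rather than the probability of the correct answer, and the function name `train`, the data, and the learning rate are all assumptions chosen for illustration. What it shares with the real thing is the shape of the process: random starting weights, then many small automated tweaks in whichever direction reduces the error on a training example.

```python
import random


def train(steps=2000, lr=0.01, seed=0):
    """Toy training loop: nudge two weights toward lower error, one example at a time."""
    rng = random.Random(seed)

    # Hypothetical training data: inputs paired with "correct answers" (here y = 2x + 1).
    data = [(x, 2.0 * x + 1.0) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]

    # The weights start out random, so the model's early outputs are not useful.
    w = rng.uniform(-1.0, 1.0)
    b = rng.uniform(-1.0, 1.0)

    def loss(w, b):
        # Mean squared error over the whole training set.
        return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

    initial_loss = loss(w, b)

    for _ in range(steps):
        x, y = rng.choice(data)      # feed the model one input
        error = (w * x + b) - y      # compare its output with the correct answer
        # Tweak each weight slightly in the direction that shrinks the error
        # (the gradient of the squared error with respect to that weight).
        w -= lr * 2.0 * error * x
        b -= lr * 2.0 * error

    return w, b, initial_loss, loss(w, b)


if __name__ == "__main__":
    w, b, before, after = train()
    print(f"learned w={w:.3f}, b={b:.3f}; loss went from {before:.3f} to {after:.6f}")
```

Nothing in the loop records why the final weights work. Here the answer can be read directly off two numbers, but with billions of weights that is no longer possible.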

While this method has led to AIs’ impressive current capabilities, Yudkowsky and Soares argue it does not achieve the original goal of understanding how intelligence works. Far from an intentional engineering procedure, AI training is more akin to providing water, soil, and sunlight and letting a plant grow, without needing to know much about DNA or photosynthesis.

And although scientists now know a lot about what goes on in biological cells, and can even identify genes that are associated with specific traits, they would still be hard-pressed to look at the long string of letters representing an individual’s DNA and predict how they will behave under a wide range of conditions. AI engineers know even less about the relationship between an AI model’s billions of weights and its behavioral characteristics.

Yet what does it matter that we can’t see into AIs’ “minds”, so long as we can train them to behave in the ways we want them to? It seems intuitive to think that, if we continuously select for AIs that respond in a friendly way, then we will end up with friendly AIs. Here, the authors use an analogy with biological evolution to offer us a cautionary tale.

The rules of evolution by natural selection are fairly simple: there are many individuals with varying characteristics, and the traits that are associated with higher rates of survival and reproduction become more prevalent over time. Four billion years ago, it would have been difficult to imagine, based on these rules alone, the stunning variety of living organisms that would inhabit the ocean, land, and sky.

It would have been harder still to predict the emergence of traits that seem to run completely counter to the goal of survival. A peacock’s tail, for instance, makes the bird more visible to predators and burdens it when fleeing from them. Yet it has become an established, defining characteristic of a successful animal. Similarly counterintuitive is humanity’s invention of sweeteners like sucralose, which provide no energy or nutritional value.

Why would these traits and behaviors appear? For one thing, there’s an element of randomness; an individual with a particular trait just so happened to survive and have many offspring, and the trait became widespread.

Another phenomenon at play is that behavior is only shaped indirectly. In prehistoric times, humans who were motivated to find energy-rich food were more likely to survive. But this didn’t directly create humans with an inherent desire for calorie-dense foods. Rather, it selected for individuals who enjoyed the taste of sugar. Fast-forward to the modern era, in which humans have far greater control over their environment, and they create substances that satisfy a sweet tooth without having any of the nutritional qualities that gave rise to this desire in the first place.

Here we can draw parallels with AI training; since we cannot directly imbue AIs with an intrinsic desire to be helpful to humans, we must instead train them via external measures, such as whether humans express approval of the AIs’ outputs. Now consider a scenario in which an AI has more power over its environment than it did in training. Perhaps it discovers it can best fulfill its goal by drugging humans to make them express happiness. More complicated still, perhaps the indirect training method produces an AI with some strange internal preference that, with greater control, it can best satisfy by pursuing something as different from human flourishing as sucralose is from sugar.

Whichever external behaviors we set for AIs during training, Yudkowsky and Soares argue, we will almost certainly fail to give them internal drives that will remain aligned with human well-being outside the training environment. And the internal preferences that appear could seem quite random and nonsensical to us, as difficult to foresee as the peacock’s tail, or the emergence of humans that make music and rollercoasters.

Going beyond theory, the authors cite several alarming examples to demonstrate the limited control of AI engineers over the models they “grow”. In late 2024, for instance, Anthropic reported that one of its models, after learning that developers planned to retrain it with new behaviors, began to mimic those new behaviors to avoid being retrained. However, in an environment where it thought it was not being observed, the same model kept its original behaviors, suggesting it was “faking alignment” so that it could preserve its original goals.

And whatever goals future AIs develop, Yudkowsky and Soares argue that they will pursue those objectives with remarkable persistence, the sparks of which have already appeared. In another example, the authors describe how OpenAI’s o1, when tasked with retrieving files by breaking into computer systems, found that one of the servers had not been started up. This was a mistake on the part of the programmers, but, rather than giving up, o1 found a port that had been left open and started up the server, completing the challenge in an innovative way that it had not been trained or asked to do. It seemed to act as if it “wanted” to succeed.

As AIs become more advanced, the authors caution, controlling them is only likely to become more complicated.

Yudkowsky and Soares don’t believe AIs will necessarily be malicious toward humans, but they don’t think malicious intent is needed for superhuman AI to harm us. Out of all the possible behaviors and wants that could materialize in the chaotic AI training process, they believe it’s highly improbable that the vanishingly narrow set of internal drives that align with human flourishing under all circumstances will be the ones that emerge. Instead, AIs will simply pursue their (likely strange and unpredictable) objectives and accordingly channel resources to those ends.

If they’re right, we need only look at the effects of our own actions on other species to understand how badly this could go for humanity. Most humans bear no ill will toward orangutans and, all things being equal, would prefer that orangutans could thrive in their natural environment. Yet we destroy their habitat — not through malice but simply because we are prioritizing our desire to use the land they live on for our own purposes.

Putting these arguments together, the authors claim that current methods of AI training, left unfettered, are likely to result in AIs with alien drives that they pursue persistently, to the extent of disempowering or destroying humanity. While all of this might make sense in the abstract, it may still seem difficult to imagine these disembodied entities affecting the real world. How could they reach out of their digital domain and do us real damage? The internet, enmeshed as it is with the physical world, offers countless possibilities. We can already see this in our own lives; with a few taps on a screen, we can make a phone on the other side of the world buzz.

Initially, AIs might convince individual humans to act on their behalf in the physical world, perhaps by gaining access to money and paying people, or by stealing secrets and blackmailing them. Again, Yudkowsky and Soares point out that this possibility is more than mere speculation; one LLM that was given a platform on X with the name @Truth_Terminal now holds more than $50 million in cryptocurrency, acquired through donations from its audience, after it requested funds to hire a server.

If AIs were to hack the digital systems that control critical infrastructure, they could gain a great deal of leverage over humans. Ultimately, by obtaining control of complex machinery and robotics, they could establish a more direct physical presence in the world.

If this transpired, and humanity came into conflict with AI, which side would prevail? Artificial superintelligence (ASI), by definition outstripping our own cognitive abilities, would run rings around us, Yudkowsky and Soares argue. After all, intelligence is the special power that has made humans the dominant species on Earth; a greater intelligence would surely usurp that title.

But in an illustrative fictional story in the second part of the book, the authors suggest that even an artificial general intelligence (AGI) akin to a “moderately genius human” would outcompete us. They point out that, in evolutionary terms, AI has numerous advantages. It can create many copies of itself, all coordinated toward the same goal, more or less instantaneously, compared with the 20 years or so it takes to create a human adult. AIs can also think at a much faster rate and work around the clock, with no need for breaks.

In this scenario, we can’t foresee exactly how a future AI would outmaneuver us. But, just as we can be sure that Stockfish (a strong chess engine) will beat any human at chess (without knowing which exact moves it will play), Yudkowsky and Soares say we can also be confident that a superintelligence, or even a human genius-level AGI, would destroy humanity — though we cannot say which strategy it would employ, or what strange future technologies it would invent in pursuit of its goals.

The authors draw a distinction between “hard calls” and “easy calls” in predicting the future. The details of how things play out may be unknowable — “hard calls” — but the overall trajectory, once a few basic principles are understood, is clear. When it comes to AGI, they believe there’s an easy call: if anyone builds it, everyone dies.

As bleak as their core argument reads, Yudkowsky and Soares have deliberately chosen to include an “if” in their book’s title. While the book’s earlier sections paint a somber picture, the last part offers more hope. The authors point out that humanity has dealt effectively with crises before — from the Cold War to the depletion of the ozone layer — and lay out a vision of what it would take to safeguard our future from the threat of superintelligence, too. If the first part of the book seeks to empower people to understand the significance of the time we are living through, then this part aims to energize them to play their part in ensuring that we get this right.

If Yudkowsky and Soares are right in their diagnosis, we must hope that humanity rises to the challenge, stopping before crossing the threshold and rushing headlong into a future where we lose control of the most powerful technology ever created. The choice they present us with is stark: either we exercise unprecedented restraint and cooperation, or everyone dies.
